2010-11-28 Why Do We Need More Wikileaks and Cryptome

Why Do We Need More WikiLeaks and Cryptome?

In recent months, there have been many articles about WikiLeaks: in favor (Foreign Policy (2010-10-25), Salon (2010-03-17)), against (Washington Post (2010-08-02), Jimmy Wales "WikiLeaks Was Irresponsible" (2010-09-28) or Wikileaks Unlike Cryptome) or neutral (New Yorker (2010-06-07)). Following public and private discussions about information-leaking websites like WikiLeaks, or even about the vintage Cryptome website, I personally think the point is not whether to support a specific leaking website, but rather to support their diversity.

The term "truth" is often mentioned when talking about "leaking websites", but these websites only provide material for building your own "truth". That's the main reason why we need more "leaking websites": you need measurable and observable results, just as in a scientific experiment. Diversity is an important factor, not only in biology but also when you want to build some "truth" from leaked information. Even if the leaked information is the same raw stream of bytes, the way it is disclosed is already a method of interpretation (e.g. is it better to give it to journalists 4 weeks in advance, or to give everyone a way to comment on and analyze all the leaked information at the same time?). As there is no simple answer, the only way to improve is to try many techniques and approaches and find out for yourself which is most appropriate.

What should we expect from the next generation of "leaking websites"?

I don't really know, but here are some thoughts based on reading HN and on some of my other physical and electronic readings.

- Collaborative annotation of the leaked information (e.g. relying on the excellent free software Annotator from the Open Knowledge Foundation)

- Collaborative voting on leaked information: when you have a list of contributors annotating regularly, you may derive from it a list of voters who decide whether a piece of information should be leaked or not. This could be useful, for example, when there are doubts about sources.

- Collaborative distribution. Many of the existing sites are based on a centralized platform that is easy to shut down (see an interesting HN discussion about distributed content for WikiLeaks, where free software like Git could play a role too).
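The voting idea can be sketched with awk, under some loudly stated assumptions: annotations and votes live in two plain-text logs with one record per line, and "annotating regularly" is reduced to a minimum number of annotations (3 by default). The log formats, the `leak`/`hold` vote values and the `tally_votes` name are all hypothetical, not taken from any existing platform.

```shell
# tally_votes (hypothetical): count votes per document, keeping only the votes
# cast by contributors who annotated at least MIN times.
#   $1  annotation log, one "<contributor> <document>" per line (assumed format)
#   $2  vote log, one "<contributor> <document> <leak|hold>" per line (assumed format)
#   $3  MIN, minimum number of annotations to become a voter (default 3)
# The NR == FNR idiom assumes the annotation log is not empty.
tally_votes() {
  awk -v min="${3:-3}" '
    NR == FNR { annotations[$1]++; next }                    # 1st file: count annotations per contributor
    (annotations[$1] + 0) >= (min + 0) { votes[$2 " " $3]++ } # 2nd file: votes from regular annotators only
    END { for (k in votes) print k, votes[k] }               # "<document> <choice> <count>"
  ' "$1" "$2" | sort
}
```

With an annotation log where alice has three entries and bob one, `tally_votes annotations.log votes.log 3` only counts alice's votes. The sketch lets a voter vote several times on the same document; a real platform would also need to deduplicate and authenticate votes.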

We are just at the beginning of a new age of information leakage that could benefit our societies. But the only way to ensure that benefit is to promote diversity, not scarcity, of those platforms.

Update 20101202: It seems that some former members of WikiLeaks decided to build an alternative platform (source: Spiegel). Diversity is king, especially for the interpretation of leaked information.

2010-10-24 Open Access Needs Free Software

The "Open Access Movement" depends on Free Software

Last week was Open Access Week, which promotes open access to research publications and encourages academia to make it the norm in scholarship and research. The movement is really important to ensure an adequate level of research innovation by easing access to research papers, especially to avoid publisher lock-in, where research publications are not easily accessible and you are forced to pay an outrageous price just to read them. I think Open Access is the inevitable path for scientific research in the future, even if Nature (a non-Open Access publication) disagrees.

But there is an interesting paradox in the open access movement that needs to be solved, especially if the movement wants to preserve its existence in the long run. Access must go further than the papers themselves and extend to the infrastructure that makes open access work. For example, arXiv is one of the major open access repositories, where open access papers in physics, chemistry or computer science are stored. arXiv had some funding difficulties in late 2009. What happens to those repositories when they run out of funding? A recent article on linuxFR.org about open access forgot to mention the free software aspect of those repositories. Why do even free software promoters forget to mention the need for a free software infrastructure for open access repositories? Where is the software back-end of HAL (archive ouverte pluridisciplinaire, a multidisciplinary open archive) or arXiv.org?

Here is my call:

- Open access repositories must rely on free software to operate and to ensure their long-term survival

- Open access repositories must provide a weekly data set including the submitted publications and the linked metadata, to allow independent replication (where the community could help)
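As a rough illustration of the second point, a repository could publish, next to its weekly data set, a checksum manifest of everything added or changed during the week, so that any independent mirror can check that its copy is identical. This sketch assumes GNU coreutils (`sha256sum`) and a repository whose publications and metadata are stored as plain files under one directory; `weekly_manifest` is a hypothetical name.

```shell
# weekly_manifest (hypothetical): print "<sha256>  <path>" for every file under
# the repository directory $1 modified during the last 7 days, sorted by path.
# Assumes GNU coreutils; a mirror can verify its copy with: sha256sum -c manifest.sha256
weekly_manifest() {
  (
    cd "$1" || exit 1
    find . -type f -mtime -7 -exec sha256sum {} + | LC_ALL=C sort -k 2
  )
}
```

For example, `weekly_manifest /srv/repository > manifest.sha256` (the path is only an example), then on the mirror, from the root of its copy, `sha256sum -c manifest.sha256` reports any file that differs.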

Open access is inspired by the free software movement but somehow forgets that its own existence is linked to free software. Next time I see and enjoy a new open access project in a specific scientific field, I will ask myself about its publication repository and its software.

2010-08-15 Free Software Is Beyond Companies

Free Software Is Beyond Companies

After Oracle's recent decision to drop its free software strategies, the usual discussion about free software and its viability in large corporations is back. But I strongly believe that this is not the question. The question is not whether free software is compatible with large corporations or with some business practices. What is inherently different about free software is its ability to provide free/convivial[1] "tools" (as described by Ivan Illich) for everyone, including large corporations.

Following the recent GNOME Census, many news articles highlighted the large or small contributions of various companies. But the majority of contributions are still made by volunteers, some of whom are paid by small or large corporations. This doesn't mean that the company funding the author is always aware of the contribution, or that the company is doing it for the inner purpose of free software.

Another interesting fact is that free software authors tend to keep "their" free software with them when moving from one company to another. Free software authors often use companies as a funding scheme for their free software interests. Obviously, companies enjoy this because it gives them a way to attract talented people who contribute directly or indirectly to the company's interests. But when the mutual interest goes away, authors and companies separate. That's usually when you see forks appearing and/or corporations playing different strategies (e.g. switching to aggressive licensing or stopping their open technology strategy).

Is that bad or good for free software? I don't know, but it generates a lot of vitality in the free software ecosystem, meaning that free software is still alive and well and contributors keep working. But it clearly shows the importance of copyright assignment (or independent author copyright), and of making sure that the assignment is always tied to the interest of a free society in keeping the software free.

Associated reading (EN) : Ivan Illich, Tools For Conviviality

Further reading (FR): La convivialité, Ivan Illich (ISBN 978-2020042598)

2010-06-19 Searching Google From The Command Line

(shortest path for) Searching Google From the Command Line

Google recently announced their "Google Command Line Tool"; it is nice, but it is missing an obvious feature: searching Google… I found various programs that do it, but they always rely on external software or libraries rather than the core Unix tools. So can we do it using only standard Unix tools? (besides "curl", which could even be replaced by telnet sending an HTTP request if required)

To search Google through an API, you can use the AJAX interface (as the old Google search API is now defunct). The documentation of the interface is available, but the output is JSON. JSON is nice for browsers but awkward to parse on the command line without external tools like jsawk. But it's still text output, so it can be parsed by the wonderful awk (made in 1977, a good year)… In the end, it is just a file with comma-separated "key":"value" pairs. Then you can throw away the key and display the value.

curl -s "http://ajax.googleapis.com/ajax/services/search/web?v=1.0&start=0&rsz=large&q=foo" | sed -e 's/[{}]//g' | awk '{n=split($0,s,","); for (i=1; i<=n; i++) print s[i]}' | grep -E '"(url|titleNoFormatting)":' | sed -e 's/"//g' | cut -d: -f2-

And the output:

http://en.wikipedia.org/wiki/Foobar
Foobar - Wikipedia
http://en.wikipedia.org/wiki/Foo_Camp
Foo Camp - Wikipedia
http://www.foofighters.com/
Foo Fighters Music
...
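To see what the parsing stages of the pipeline do without touching the network, here is a minimal sketch that runs them on a hand-written JSON sample. The sample is an assumption, a simplified result in the rough shape of an AJAX search answer rather than a captured response, and `parse_results` is a hypothetical name.

```shell
# parse_results (hypothetical name): the parsing stages of the one-liner,
# reading JSON on stdin and printing the url and titleNoFormatting values.
parse_results() {
  sed -e 's/[{}]//g' |                                                      # remove the object braces
    awk '{ n = split($0, s, ","); for (i = 1; i <= n; i++) print s[i] }' | # one "key":"value" per line
    grep -E '"(url|titleNoFormatting)":' |                                  # keep the two keys of interest
    sed -e 's/"//g' |                                                       # drop the double quotes
    cut -d: -f2-                                                            # drop the key; -f2- keeps the ':' of "http:"
}

# Hand-written sample (assumed shape). "content" is placed last so that the
# leftover "]" after brace removal does not end up in the title.
sample='{"responseData":{"results":[{"GsearchResultClass":"GwebSearch","url":"http://en.wikipedia.org/wiki/Foobar","titleNoFormatting":"Foobar - Wikipedia","content":"A placeholder name"}]},"responseStatus":200}'

printf '%s\n' "$sample" | parse_results
```

This prints `http://en.wikipedia.org/wiki/Foobar` followed by `Foobar - Wikipedia`. A title containing a comma would be cut at the comma, a limitation the one-liner shares.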

Now you can put the search in a shell function, replacing foo by $1 (an alias won't do, since aliases cannot take positional arguments). Do we need more? I don't think so, besides a Leica M9…
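A minimal sketch of such a function in POSIX sh follows. The names `gsearch` and `gsearch_url` are hypothetical, the endpoint is copied from the one-liner in this post and may no longer answer, and the query encoding is deliberately minimal: only spaces are turned into `+`.

```shell
# gsearch_url (hypothetical helper): build the request URL from the arguments.
# Only spaces are encoded (as '+'); characters such as '&' or '#' are not.
gsearch_url() {
  printf 'http://ajax.googleapis.com/ajax/services/search/web?v=1.0&start=0&rsz=large&q=%s\n' \
    "$(printf '%s' "$*" | sed -e 's/ /+/g')"
}

# gsearch (hypothetical): the one-liner as a function, with the query taken
# from the arguments instead of the hard-coded "foo".
gsearch() {
  curl -s "$(gsearch_url "$@")" |
    sed -e 's/[{}]//g' |
    awk '{ n = split($0, s, ","); for (i = 1; i <= n; i++) print s[i] }' |
    grep -E '"(url|titleNoFormatting)":' |
    sed -e 's/"//g' |
    cut -d: -f2-
}
```

Put it in your shell startup file and call `gsearch free software`; the two words are joined into `q=free+software`.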

Update Saturday 19 June 2010: Philippe Teuwen found an interesting limitation (or a bug, if you prefer) regarding the Unicode and HTML encoding used in titles. Sometimes you may get garbage in the title (especially with the Unicode-escaped ampersand of an HTML entity). To solve the issue, Philippe piped the curl output into json_xs and then into recode html. This solves the issue, but my main goal is to avoid external tools. You can strip the garbage violently with an ugly "tr" or "grep [[:alpha:]]". I'm still digging for a "pure core" Unix alternative…
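In the same "core tools only" spirit, the most common escapes can be undone with sed alone. This is a sketch, not a replacement for json_xs or recode: it assumes the garbage comes from the JSON escapes `\u0026`, `\u003c`, `\u003e` and `\u0027` (for `&`, `<`, `>` and `'`) and from a handful of HTML entities; any other entity passes through unchanged. `decode_title` is a hypothetical name.

```shell
# decode_title (hypothetical): undo a few JSON \u00XX escapes, then a few HTML
# entities, using sed only. Input on stdin, decoded text on stdout.
# "&amp;" is decoded last so that "&amp;lt;" becomes "&lt;" and not "<".
decode_title() {
  sed -e 's/\\u0026/\&/g' \
      -e 's/\\u003c/</g' \
      -e 's/\\u003e/>/g' \
      -e 's/\\u0027/'"'"'/g' \
      -e 's/&lt;/</g' \
      -e 's/&gt;/>/g' \
      -e 's/&quot;/"/g' \
      -e 's/&#39;/'"'"'/g' \
      -e 's/&amp;/\&/g'
}
```

It can be appended to the search pipeline, e.g. `... | cut -d: -f2- | decode_title`, so that a title like `Tom \u0026amp; Jerry` comes out as `Tom & Jerry`.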

Tags: google command-line unix internet search

2010-05-24 Information Wants To Be Free Is Becoming An Axiom

"Information wants to be free" is now becoming an axiom

The latest article by Cory Doctorow on guardian.co.uk, "Saying information wants to be free does more harm than good", rings a bell for me. It seems that we still often don't understand the profound meaning of this mantra. One possible origin of the expression is Peter Samson, who claimed around the fifties: "Information should be free."

When Steven Levy published his book "Hackers: Heroes of the Computer Revolution", the chapter "The Hacker Ethic" included a section called "All information should be free", in reference to the Tech Model Railroad Club (TMRC), of which Peter Samson was a member. Steven Levy's explanation:

The belief, sometimes taken unconditionally, that information should be free was a direct tribute to the way a splendid computer, or computer program, works: the binary bits moving in the most straightforward, logical path necessary to do their complex job. What was a computer but something which benefited from a free flow of information? If, say, the CPU found itself unable to get information from the input/output (I/O) devices, the whole system would collapse. In the hacker viewpoint, any system could benefit from that easy flow of information.

A variation of this mantra was made by Stewart Brand at a hackers conference in 1984:

On the one hand information wants to be expensive, because it's so valuable. The right information in the right place just changes your life. On the other hand, information wants to be free, because the cost of getting it out is getting lower and lower all the time. So you have these two fighting against each other

We could even assume that the modified mantra was a direct response to Steven Levy's book and its chapter "The Hacker Ethic" (as mentioned in a documentary called "Hackers - Wizards of the Electronic Age"). The mantra, or aphorism, has been used over the past twenty-five years by a large community. Its application by the GNU project is even mentioned in various documents, including, again, Steven Levy's book.

In his latest article, Cory Doctorow doesn't want to put the emphasis on information but on people's freedom. I agree with that point of view, but the use of "information wants to be free" is a different matter. I want to take it from a different angle: information is not bound to physical properties the way physical objects are. Liberated from physical rules, information tends to be free.

Of course, this is not a real axiom, but it is not far from being one. If you look at the current issues in "cyberspace", they are always related to this inner effect of information. Have you seen all the unsuccessful attempts to make DRM (digital restrictions management or digital rights management, depending on your political view) work? All the attempts by the dying music industry to shut down OpenBitTorrent or any open indexing service? Or even the closing of newzbin, where the source code and the database leaked at the same time? Or the inability to create technology to protect privacy (the techniques are not far from the failed attempts of DRM technologies)?

Yes, "information wants to be free", simply by effect, and we have to live with that fact. I personally think it is better to exploit this effect than to try to limit it. It's just like fighting against gravity on Earth…

Tags: freedom information free freesoftware

2010-05-03 Information Action Ratio

Information-action Ratio or What's Your Opinion About Belgian Politics?

Listening to the Belgian news is often a bit surreal (which makes sense in the country of surrealism), as they talk about problems that we don't really care about or that don't really affect citizens, even if the media claim that Belgian politics (and the crisis some politicians just created) affect our lives. If you listen to every breaking news story, most of it is useless information that you can't use to improve your life or society. Neil Postman described this with a nice concept, the information-action ratio:

In both oral and typographic cultures, information derives its importance from the possibilities of action. Of course, in any communication environment, input (what one is informed about) always exceeds output (the possibilities of action based on information). But the situation created by telegraphy, and then exacerbated by later technologies, made the relationship between information and action both abstract and remote.

You can replace telegraphy with your favourite medium, but this is a real issue with current news channels (e.g. television, radio…). The information is so distant from what you do every day. We can blame our fast communication channels, which are very different from a book or an extensive article on a specific subject, where the information is often well organized and stimulates thinking (which can lead to action). Why do we listen to information that we don't care about? Why do we give so much importance to that useless information? I don't have a clear answer. I'm sure of at least one thing: instead of listening to or watching useless information in the media, like Belgian politics, I'll focus more on media (including books) that increase my information-action ratio.

If you want to start in that direction too, I recommend Amusing Ourselves to Death by Neil Postman.

Tags: contribute media belgium

2010-02-14 Contribute Or Die

Contribute or Die?

In past years, I participated in plenty of meetings, conferences and research sessions covering technical and non-technical aspects of information security and information technology. Looking back and trying to understand what I did right or wrong, and especially what makes an information technology project successful, I tend to find a common point in every successful (or at least partially successful) project: making concrete proposals and building them at a very early stage of the project.

Many projects tend to become a meeting nightmare with no concrete proposal, just a thousand endless critiques of the past, present and future. Even worse, those projects are often tied to "best practices" in project management with an abuse of the broken Waterfall model. After 3 or 4 months of endless discussion, there is not a single prototype or software experiment, just a pile of documents that makes a committee happy but many software engineers angry.

If you look at successful (free and non-free) software projects or successful standardization processes, they always come from real and practical contributions. Just look at IETF practices compared to the "design by committee" methodology: practical approaches usually win. Why? Because you see the pitfalls directly and can reorient the project or the software development very early.

There is no miracle or silver bullet for a successful project, but the only way to make a project better is to make errors as early as possible. It's difficult or nearly impossible to see all the errors in such projects until you get your hands dirty. This is the basis of trial and error: you have to try to see whether something is an error or not. If you don't try, you just lower your chances of hitting errors and of improving your project, your software or even yourself.

So if you contribute, you'll make errors, but this is much more rewarding than sitting in a chair whining about a project sheet that is not updated or having endless discussions. There is an interesting lightning talk from YAPC::EU 2008, "You aren't good enough", explaining why you should contribute to CPAN. I think it is another way to express the same idea: "contribute, write code, prototype and experiment", even if it is broken; someone else could fix it or start another prototype based on your broken one. We have to contribute if we want to stay alive…

Tags: contribute startup innovation

2010-01-01 Sharing e-Books with your Neighbours

Sharing e-Books with your Neighbours

or why I made Forban: a small free software tool for local peer-to-peer file sharing

Besides my recent comparison between e-books and traditional books, I own some e-books along with a huge collection of paper books. Sharing is common among book owners and bibliophiles. Sharing books usually produces an interesting cross-fertilization of your knowledge. This applies to any kind of book and opens your mind to new books, authors, ideas or even new perspectives on your life. Sharing books is a common and legally allowed activity; there are even websites supporting the sharing of physical books, like BookCrossing. With publishers' recent move to sell (or should I say "to rent") e-books to readers and bibliophiles, sharing books seems trapped in something difficult or impossible to conceive for any publisher. Even simply moving your e-books from one reader to another (in the end, just moving your book to another bookshelf) is trapped in an eternal tax of buying the e-books again and again. This eternal tax on e-books is clearly explained in "Kindle Total Cost of Ownership: Calculating the DRM Tax". Restriction technology on e-books introduces many issues and threats against sharing and access to knowledge. Restrictive DRM "pseudo-technology" on e-books is the realization of the worst nightmare described in "The Right to Read", written in 1997 by Richard Stallman and published in Communications of the ACM (Volume 40, Number 2). I'm wondering what we can do to counterbalance this excessive use of restrictive technology on books, often described as "the accessible medium of knowledge for human beings".

To support the phrase "Think Globally, Act Locally" in the face of the recent threats against book sharing, I tried to come up with something to help me share books locally, without hassle, with friends, book fans or neighbours. I created Forban to share files easily on the local network. The software is a first implementation of the Forban protocols: fully relying on traditional HTTP, with a simple UDP protocol for broadcasting and announcing the service on the local network. The protocols are simple, so that others can implement them in other free or non-free software and make local file sharing a default feature (a kind of default social duty promoting local sharing). Forban is opportunistic and automatically copies all files (called loot ;-) announced by other Forban instances on the local network. By the way, Forban uses internet protocols but does not use the Internet (a subtle but important difference, especially regarding laws like HADOPI).
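The opportunistic copy boils down to a set difference: fetch whatever a peer announces that you don't already have. This sketch shows only that step with standard tools; it leaves out the UDP announcements and the HTTP transfer, and it assumes each index is a plain list of file names, one per line, which is an illustration and not Forban's actual index format. `missing_loot` is a hypothetical name.

```shell
# missing_loot (hypothetical): print the file names listed in a peer's index
# ($2) that are absent from the local index ($1). One file name per line.
missing_loot() {
  mine=$(mktemp) theirs=$(mktemp)
  LC_ALL=C sort -u "$1" > "$mine"
  LC_ALL=C sort -u "$2" > "$theirs"
  # comm -13 hides lines unique to the local list and lines common to both,
  # leaving only the names that exist solely on the peer
  LC_ALL=C comm -13 "$mine" "$theirs"
  rm -f "$mine" "$theirs"
}
```

Each printed name would then be fetched over HTTP from the peer that announced it. Sorting both lists with the same `LC_ALL=C` collation matters, because `comm` expects its inputs sorted in the order it compares them.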

Happy new year and happy sharing for 2010.

Tags: books freedom sharing freesoftware

2009-11-08 Random Collection Number 1

2009-11-08 Random Collection #1

While cleaning up my desk or hiking in the forest, I get plenty of ideas for potentially interesting blog posts, but they are often too small to make a complete post. Some actions or comments made in physical and virtual communities could also be "blogged", but when posted on a micro-blogging platform they lose their context because of the 140-character limit (including URLs; yes, URLs should not be counted in the 140 characters of a micro-blogging platform, but that could be another blog post). You could say this is due to my friendly disorder of loving information mess or, even better, to information being connected in some way.

[flickr] Getty Images proposal is just a trap to kill CC-licensed works and especially direct author-user interactions

I recently discovered the "offer" Getty Images made to flickr users to upload some photos to their group. If they select your photos, they may end up in their stock. At first it looks very good, but digging into the FAQ of the callforartists group…

There is a chance one of your Creative Commons-licensed photos may catch the eye of a perceptive Getty Images editor. You are welcome to upload these photos into the Flickr collection on Getty Images, but you are contractually obliged to reserve all rights to sale for your work sold via Getty Images. If you proceed with your submission, switching your license to All Rights Reserved (on Flickr) will happen automatically.

If you’re not cool with that, that’s totally cool. It just means that particular photo will need to stay out of the Flickr collection on Getty Images.

Hey guys, this is not cool. I wrote my arguments against the scheme in their group: flickr helped artists get rid of some stock photography monopolies, but now those monopolies are coming back by removing CC-licensed works from flickr (if you put your works in their group).

[digital archiving] Digitizing books or fragile documents

During my volunteer work for the preservation of intangible heritage in our region (in French), I made some comments about the digitization of books (in French), and I discovered a free software/hardware project called Decapod that provides an easy, low-cost hardware solution for digitizing books. I would really like to test it or find a similar project. It is a good step towards making digital archiving and preservation more accessible while using free software and free hardware.

[copyright delirium] ACTA confidential document leaked but where?

WikiLeaks released a copy of the initial ACTA draft agreement between the US and JP, but that was in late 2008, and it was called "revision 1 - June 9, 2008". There are plenty of recent articles about a leak of the latest version of the ACTA agreement, but I can't find the leaked document. The document from the EU is a summary of the content with references, not the actual document. I suppose there have been other revisions since June 2008, especially one including the Internet part. The drafting process for such legal texts is scary, especially knowing that it will be the basis for a treaty, an EU directive and then various national transpositions. Can someone distribute the latest version of the ACTA agreement?

2009-10-25 An e-Book Reader Is Not A Book

An E-Book (Reader) Is Not A Book

or why I'm not happy with e-book readers and still read paper-based books

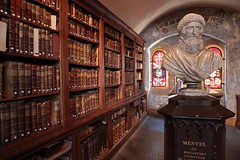

Every year at home, we read and stack more than 3 shelves of books. As you can see in the picture above, I'm forced to stack books on top of the bookshelves (here on top of a classic "Billy" from a well-known Swedish supplier). Looking at my own use of books, electronic books seemed to be a nice opportunity. So I tried various e-book readers (over a period of more than 2 years), but without any success or even a positive experience. I won't review all the readers I tried, from free software e-book readers to proprietary physical e-book readers. While testing those devices, I took some notes describing the issues I encountered when reading electronic books compared to traditional books. I summarized my impressions below (in the end, this is just my own reading experience, so it is quite subjective). They are based on the additional costs of an e-book reader compared to traditional printed books.

the set-up cost

A book doesn't need time to boot, set up, charge its battery, refresh a page, index, recover or even crash. If you have 5 minutes while waiting for your friends, you just open the book and start reading. With an electronic book, this is not the case. In the best case, the e-book is ready, but you notice that the battery is running low and you are stuck in your car waiting for someone without being able to read (worse, you forgot the car charger. This happens). Right now, nothing beats a paper book on set-up…

the social cost

An electronic book limits social interaction in the tangible, physical world. A classic example: I can't count how many times reading a book on a train was the start of a discussion, often just because the traveller next to me was trying to read the cover to see what I was reading… With an electronic book, starting a conversation is harder: how can the traveller read the cover of your electronic book? Today, this is not possible. The same applies in libraries, bookstores, or at home when visitors casually look at your bookshelves. You can socialize around books on the Internet, but shutting down your local social interaction by moving from books to e-books is not an option for me.

the comfort cost

I read everywhere, but my bed is one of the first places where I read. Sorry, but a computer or device in bed is something strange (even a traditional book can be awkward). Reading a screen before sleeping is like having light therapy just before sleeping. A traditional book does not emit light; indirect light is used to read what's written on the paper. So the comfort of a paper book is unbeatable, especially when reading in your bedroom.

One of my great pleasures is reading a book while drinking tea. I have spilled tea on a book, but the effect is fundamentally different with an electronic device. Water (and other liquids) is dangerous for books, but it's worse for an electronic device.

the annotation cost

I know it's bad, but I annotate my books (margin notes, highlighting…) and often go back to those annotations. You need a pencil and that's it. With electronic books, this is difficult (sometimes impossible the way you want it), and querying your annotations afterwards is also a pain.

Conclusion and still living with my paper books

The cost of using electronic books is high and doesn't bring much value (at least to me) compared to a traditional paper book. The only useful use of an electronic book is when you need a reference book to look up a word. Despite being someone who uses and creates technologies, I'm still more convinced by reading and using all those old books. The ecological impact of printing books is high but is becoming more and more limited. It's really difficult for me to find real, concrete advantages of electronic books. Maybe the main advantage is not needing bookshelves, but I would be a bit nostalgic for our guests straining their necks to read the titles. So I'll keep buying new bookshelves… at least for the next few years.

Footnotes:

1. Convivial is used in the sense of tools that you can access and use by yourself, independently. For more information, see Ivan Illich, Tools For Conviviality